The rise of generative AI technologies such as ChatGPT and Bing has officially blurred the lines around data privacy, creating new challenges over who owns data and what must be disclosed to end users. But it’s not just about privacy: when these learning algorithms are turned against organizations, they pose significant security challenges too. Awareness of these risks is the most important step in addressing the human-error element of today’s data breaches.

What Is Generative AI?

Generative AI is a type of artificial intelligence technology that can be used to produce various types of content, including text, imagery, audio and synthetic data.

The process is enabled by generative AI models, which learn the patterns and structure of their training data and then generate new data with similar characteristics.
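To make the “learn patterns, then generate” idea concrete, here is a deliberately tiny Python sketch. It is not how ChatGPT or DALL-E work (they use large neural networks); it swaps in a simple word-level bigram model, chosen only for brevity, that records which words follow which in the training text and then samples new text with the same patterns. The function names and the sample corpus are hypothetical.

```python
import random
from collections import defaultdict


def train_bigram_model(text):
    """Learn the patterns: record which words were seen following each word."""
    words = text.split()
    model = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        model[current].append(nxt)
    return model


def generate(model, start, length, seed=None):
    """Generate new text by repeatedly sampling an observed next word."""
    rng = random.Random(seed)
    output = [start]
    for _ in range(length - 1):
        followers = model.get(output[-1])
        if not followers:  # no word was ever seen after this one
            break
        output.append(rng.choice(followers))
    return " ".join(output)


# Hypothetical training text, for illustration only
corpus = ("the bank sent a notice the bank asked you to verify "
          "your account the account was locked")
model = train_bigram_model(corpus)
print(generate(model, "the", 8, seed=1))
```

Every word pair in the output was seen during training, yet the sentence as a whole can be new. The same property is why a model trained on real personal data can echo fragments of it back.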

Generative AI has been adopted across a swathe of industries, including financial services, publishing, software development and healthcare, to name a few. Perhaps its most notable applications are chatbots like ChatGPT and Bing, and text-to-image tools like Stable Diffusion and DALL-E, which use deep learning techniques to generate images from a natural language prompt.

AI Privacy Concerns

In some respects, generative AI can be too effective at certain tasks. One such task is synthesizing data that resembles real data, which raises concerns that the synthetic records could be used to re-identify real people. This can happen when models are trained on large data sets without sufficient testing.
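One common way to gauge re-identification risk in a data set, real or synthetic, is k-anonymity: group the records by quasi-identifiers such as postcode, birth year and gender, and measure the smallest group. The Python sketch below assumes records are plain dicts; the field names and values are invented for illustration. If k is 1, at least one record is unique on those attributes and could potentially be linked back to a real person using an outside source.

```python
from collections import Counter


def k_anonymity(records, quasi_identifiers):
    """Return k: the size of the smallest group of records that share
    identical values for every quasi-identifier field."""
    if not records:
        raise ValueError("records must not be empty")
    groups = Counter(
        tuple(record[field] for field in quasi_identifiers) for record in records
    )
    return min(groups.values())


# Hypothetical synthetic records; field names are illustrative only
synthetic = [
    {"zip": "10001", "birth_year": 1980, "gender": "F", "diagnosis": "asthma"},
    {"zip": "10001", "birth_year": 1980, "gender": "F", "diagnosis": "flu"},
    {"zip": "10002", "birth_year": 1975, "gender": "M", "diagnosis": "diabetes"},
]
quasi_ids = ["zip", "birth_year", "gender"]
print(k_anonymity(synthetic, quasi_ids))  # 1: the third record is unique
```

What value of k is acceptable before data is released or used for training is a policy decision for the organization, ideally recorded as part of a privacy impact assessment.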

CHATBOTS

Chatbots may collect a lot of personal data, IP information and cookies when interacting with customers. There is a real risk that the information collected breaches GDPR rules on storing and transmitting personally identifiable information (PII) and special category data, particularly with public chatbots, over which organizations have less visibility.

RIGHT TO BE FORGOTTEN

Honoring GDPR data subject requests, such as requests to erase personal data, is relatively straightforward with organizational databases. It’s much harder to delete data from a machine learning model, because the data is absorbed into the model’s learned parameters rather than stored as a discrete record, and removing it may undermine the utility of the model itself.

UNLAWFUL PROCESSING

Organizations such as Clearview AI have been fined by regulatory authorities for collecting millions of images and other personal data from public online sources such as Facebook for use in their AI-powered identification products. Generative AI heightens the risk that businesses process and share personal information without the knowledge or consent of the data subjects.

LACK OF TESTING

Normally, high-risk applications are subject to privacy impact assessments. Companies frequently overlook this step when adopting open-source AI, which can create significant risk.

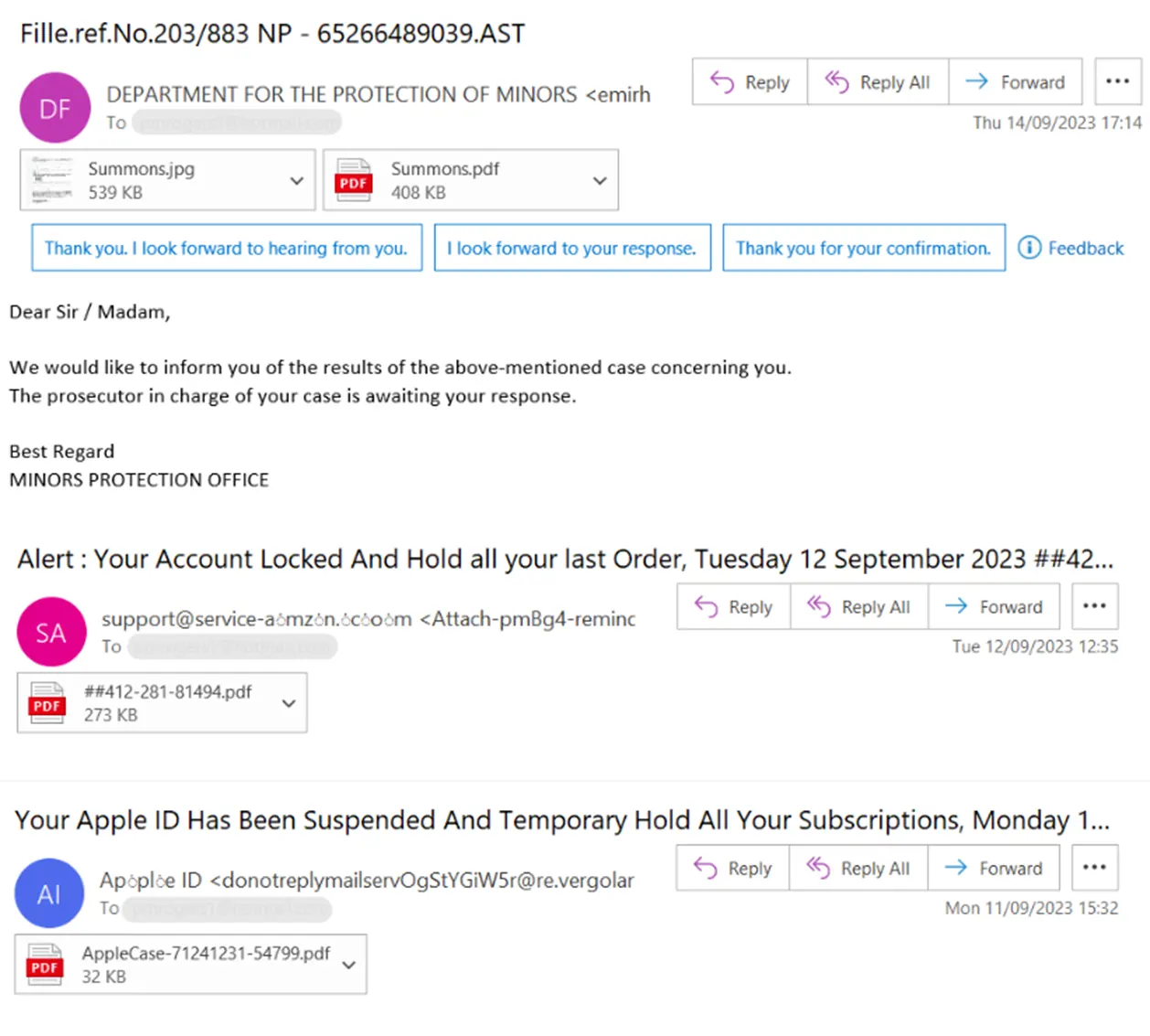

Advanced Automated Phishing Threats

The traditional types of phishing are shown in the diagram and described below.

Email Phishing: Garden-variety phishing, where a generic email is sent indiscriminately to people on a mailing list or at a company domain.

Spear Phishing: A more targeted form of attack, where known details about the recipient are used to get their attention and encourage them to act.

Whaling: Similar to spear phishing, but aimed at senior executives, often with the objective of getting them to authorize fund transfers.

Vishing: Attacks that use voice or VoIP calls and messages, such as a voicemail instructing you to call your bank about a security issue.

Smishing: Phishing based on text (SMS) messages.

SEO Phishing: Cybercriminals use search engine optimization to appear among the top search results and lure searchers to a spoofed website.
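Spoofed websites and phishing links often rely on lookalike domains such as “paypa1.com”. One simple heuristic for catching them is to compare a domain against an allow-list of trusted domains and flag near misses. The Python sketch below uses the standard library’s `difflib.SequenceMatcher` similarity ratio; the allow-list and the 0.8 threshold are assumptions for illustration. Real tools also check things such as internationalized (punycode) homoglyphs and how recently a domain was registered.

```python
from difflib import SequenceMatcher

# Hypothetical allow-list of domains the organization really uses
TRUSTED_DOMAINS = ["paypal.com", "microsoft.com", "examplebank.com"]


def lookalike_of(domain, trusted=TRUSTED_DOMAINS, threshold=0.8):
    """Return the trusted domain that `domain` appears to imitate, or None.

    Exact matches are treated as genuine; near matches (similarity ratio at
    or above `threshold`) are flagged as possible spoofs.
    """
    domain = domain.lower().rstrip(".")
    if domain in trusted:
        return None
    for good in trusted:
        if SequenceMatcher(None, domain, good).ratio() >= threshold:
            return good
    return None


for candidate in ["paypal.com", "paypa1.com", "rnicrosoft.com", "wikipedia.org"]:
    print(candidate, "->", lookalike_of(candidate))
```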

Unfortunately, with the advent of AI, fake emails and texts can be generated faster than ever before. Access to AI deep learning tools is being sold as a service on the black market for comparatively low monthly fees. This gives a new generation of attackers automated attack tools that will keep going until they find a victim who clicks the link.


PDF Download Risks

PDF email attachments are now the most common way malware is introduced into organizations, with two-thirds of attacks starting with a downloaded PDF attachment. Users may not realize that malicious code, encrypted files and malicious URLs can be embedded in PDFs. Malware is often introduced quietly this way, with a huge impact on businesses in terms of business interruption, theft of customer data and investigations. Email users are easily tricked because most PDFs in the workplace are legitimate.
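PDF triage tools commonly start by looking for keywords that can trigger actions, such as `/JavaScript`, `/OpenAction` (an action to perform when the document opens) and `/Launch`. The Python sketch below is a minimal version of that idea, assuming the whole file fits in memory; the keyword list is an illustrative subset chosen for this example. A hit is a reason to inspect the file more closely, not proof of malware, and attackers can hide keywords using hex-escaped names (for example `/J#61vaScript`) or compressed object streams, which this simple byte search will miss.

```python
import re

# Illustrative subset of PDF names worth a closer look
SUSPICIOUS_KEYWORDS = [b"/JavaScript", b"/JS", b"/OpenAction", b"/AA",
                       b"/Launch", b"/EmbeddedFile", b"/URI", b"/Encrypt"]


def scan_pdf_bytes(data: bytes) -> dict:
    """Count occurrences of each suspicious keyword in raw PDF bytes."""
    counts = {}
    for kw in SUSPICIOUS_KEYWORDS:
        # The name must not continue with more letters or digits,
        # so /JS does not match inside a longer name such as /JSX.
        pattern = re.escape(kw) + rb"(?![A-Za-z0-9])"
        n = len(re.findall(pattern, data))
        if n:
            counts[kw.decode()] = n
    return counts


def scan_pdf_file(path: str) -> dict:
    with open(path, "rb") as f:
        return scan_pdf_bytes(f.read())


# Hypothetical minimal PDF fragment that runs JavaScript on open
sample = (b"%PDF-1.7\n1 0 obj << /Type /Catalog /OpenAction 2 0 R >> endobj\n"
          b"2 0 obj << /S /JavaScript /JS (app.alert('hi')) >> endobj\n")
print(scan_pdf_bytes(sample))
```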

Identity Theft

Identity theft is a crime in which someone steals your personal information and tries to impersonate you. In practical terms, this means stealing information such as credit card numbers, social security numbers, driver’s license numbers, medical records, addresses and dates of birth. In short, any information that could be used to fraudulently obtain credit or produce fake documents.

Identity theft has been around for a while, but deepfake images and video take the threat to a new level: they can be used to defeat identity checks in online banking apps and employee onboarding tools, and to generate fake medical claims.

Social media and software companies are employing various technologies and tools to defend against this threat.

Technologies such as blockchain are being used to verify the source of videos and images before they are allowed onto media platforms. Adobe lets creators attach digital signatures to content when they create it, while Microsoft now issues confidence scores indicating whether media has been manipulated.
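The core idea behind these provenance schemes is that a cryptographic fingerprint of the content is recorded when it is published, so any later change can be detected. The Python sketch below shows only that idea: a plain dict stands in for a tamper-evident ledger, and all names are hypothetical. It is not how Adobe, Microsoft or any blockchain platform actually implements provenance; real systems also use digital signatures to prove who created the content, which a bare hash cannot do.

```python
import hashlib

# Hypothetical stand-in for a tamper-evident ledger (e.g. a blockchain):
# maps a content ID to the SHA-256 fingerprint recorded at publication.
registry = {}


def fingerprint(content: bytes) -> str:
    """Return the SHA-256 hex digest of the content."""
    return hashlib.sha256(content).hexdigest()


def register(content_id: str, content: bytes) -> None:
    """Record the fingerprint when the content is first published."""
    registry[content_id] = fingerprint(content)


def is_unmodified(content_id: str, content: bytes) -> bool:
    """True only if the content was registered and is byte-for-byte unchanged."""
    expected = registry.get(content_id)
    return expected is not None and expected == fingerprint(content)


original = b"frames from the original press briefing video"
register("briefing-001", original)
print(is_unmodified("briefing-001", original))                  # True
print(is_unmodified("briefing-001", original + b" [altered]"))  # False
print(is_unmodified("unknown-id", original))                    # False
```

Because the check is byte-for-byte, even harmless re-encoding by a platform will make it fail, so a bare hash on its own is too brittle for media that gets resized or recompressed.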

Sensity offers a detection platform that uses deep learning to spot signs of deepfake media, in the same way antimalware tools look for virus and malware signatures. Users are alerted by email when they view a deepfake. That said, organizations still have large gaps in awareness of the threats AI poses.

Next Steps

Training staff on the risks posed by AI, phishing, remote working and other threats in 2024 is a key preventative control against cybercrime. New threats are emerging that require new training content to prepare staff for these very real risks. Discover our range of presentation training solutions and tools in our online store. We offer low-cost presentation training on Phishing, Generative AI Security, Remote Worker Security Awareness Training, Data Privacy Awareness Training, Cybersecurity Awareness Training and more. Click the link below to visit our store, or ask a question via our contact us page. We also build compliance training programs for organizations beyond security, e.g. code of conduct, AML and anti-harassment.